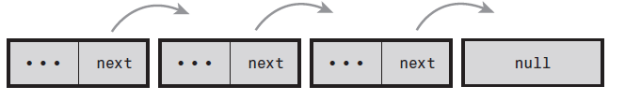

A common programming pattern in any operating system kernel is producer and consumer. In this pattern, a producer creates data—say, networking packets to be processed or error messages to be read —while a consumer, in turn, reads, processes, or otherwise consumes the data. Often the easiest way to implement this pattern is with a queue.The producer pushes data onto the queue and the consumer pulls data off the queue.The consumer retrieves the data in the order it was enqueued.That is, the first data on the queue is the first data off the queue. For this reason, queues are also called FIFOs, short for first-in, first-out. See the below figure for an example of a standard queue.

The Linux kernel’s generic queue implementation is called kfifo and is implemented in <kernel/kfifo.c> and declared in <linux/kfifo.h>.

kfifo

Linux’s kfifo works like most other queue abstractions, providing two primary operations:enqueue (in) and dequeue (out).The kfifo object maintains two offsets into the queue: an in offset and an out offset.The in offset is the location in the queue to which the next enqueue will occur.The out offset is the location in the queue from which the next dequeue will occur.The out offset is always less than or equal to the in offset. It wouldn’t make sense for it to be greater; otherwise, you could dequeue data that had not yet been enqueued

The enqueue (in) operation copies data into the queue, starting at the in offset.When complete, the in offset is incremented by the amount of data enqueued.The dequeue (out) operation copies data out of the queue, starting from the out offset.When complete, the out offset is incremented by the amount of data dequeued.When the out offset is equal to the in offset, the queue is empty: No more data can be dequeued until more data is enqueued.When the in offset is equal to the length of the queue, no more data can be enqueued until the queue is reset.

kfifo declaration

58struct __kfifo { 59 unsigned int in; 60 unsigned int out; 61 unsigned int mask; 62 unsigned int esize; 63 void *data; 64};

Creating a Queue

To use a kfifo, you must first define and initialize it. as with most kernel objects, you can do this dynamically or statically.The most common method is dynamic.

319/** 320 * kfifo_alloc - dynamically allocates a new fifo buffer 321 * @fifo: pointer to the fifo 322 * @size: the number of elements in the fifo, this must be a power of 2 323 * @gfp_mask: get_free_pages mask, passed to kmalloc() 324 * 325 * This macro dynamically allocates a new fifo buffer. 326 * 327 * The numer of elements will be rounded-up to a power of 2. 328 * The fifo will be release with kfifo_free(). 329 * Return 0 if no error, otherwise an error code. 330 */ 331#define kfifo_alloc(fifo, size, gfp_mask) \ 332__kfifo_int_must_check_helper( \ 333({ \ 334 typeof((fifo) + 1) __tmp = (fifo); \ 335 struct __kfifo *__kfifo = &__tmp->kfifo; \ 336 __is_kfifo_ptr(__tmp) ? \ 337 __kfifo_alloc(__kfifo, size, sizeof(*__tmp->type), gfp_mask) : \ 338 -EINVAL; \ 339}) \ 340)

What is gfp_mask ?

The low-level kernel memory allocation functions take a set of flags describing how that allocation is to be performed. Among other things, these GFP_ (“get free page”) flags control whether the allocation process can sleep and wait for memory, whether high memory can be used, and so on. See this article for the full set.

If you want to allocate the buffer yourself, you can:

354/** 355 * kfifo_init - initialize a fifo using a preallocated buffer 356 * @fifo: the fifo to assign the buffer 357 * @buffer: the preallocated buffer to be used 358 * @size: the size of the internal buffer, this have to be a power of 2 359 * 360 * This macro initialize a fifo using a preallocated buffer. 361 * 362 * The numer of elements will be rounded-up to a power of 2. 363 * Return 0 if no error, otherwise an error code. 364 */ 365#define kfifo_init(fifo, buffer, size) \ 366({ \ 367 typeof((fifo) + 1) __tmp = (fifo); \ 368 struct __kfifo *__kfifo = &__tmp->kfifo; \ 369 __is_kfifo_ptr(__tmp) ? \ 370 __kfifo_init(__kfifo, buffer, size, sizeof(*__tmp->type)) : \ 371 -EINVAL; \ 372})

Statically declaring a kfifo is simpler, but less common:

124/** 125 * DECLARE_KFIFO - macro to declare a fifo object 126 * @fifo: name of the declared fifo 127 * @type: type of the fifo elements 128 * @size: the number of elements in the fifo, this must be a power of 2 129 */ 130#define DECLARE_KFIFO(fifo, type, size) STRUCT_KFIFO(type, size) fifo 131 132/** 133 * INIT_KFIFO - Initialize a fifo declared by DECLARE_KFIFO 134 * @fifo: name of the declared fifo datatype 135 */ 136#define INIT_KFIFO(fifo) \ 137(void)({ \ 138 typeof(&(fifo)) __tmp = &(fifo); \ 139 struct __kfifo *__kfifo = &__tmp->kfifo; \ 140 __kfifo->in = 0; \ 141 __kfifo->out = 0; \ 142 __kfifo->mask = __is_kfifo_ptr(__tmp) ? 0 : ARRAY_SIZE(__tmp->buf) - 1;\ 143 __kfifo->esize = sizeof(*__tmp->buf); \ 144 __kfifo->data = __is_kfifo_ptr(__tmp) ? NULL : __tmp->buf; \ 145})

This creates a static kfifo named name with a queue of size bytes.As before, size must be a power of 2.

There are various functions on kfifo . please see here .

Mostly used functions are

Enqueuing Data

When your kfifo is created and initialized, enqueuing data into the queue is performed via the kfifo_in() function:

unsigned int kfifo_in(struct kfifo *fifo, const void *from, unsigned int len);

This function copies the len bytes starting at from into the queue represented by fifo. On success it returns the number of bytes enqueued. If less than len bytes are free in the queue, the function copies only up to the amount of available bytes.Thus the return value can be less than len or even zero, if nothing was copied.

Dequeuing Data

When you add data to a queue with kfifo_in(), you can remove it with kfifo_out():

unsigned int kfifo_out(struct kfifo *fifo, void *to, unsigned int len);

This function copies at most len bytes from the queue pointed at by fifo to the buffer pointed at by to. On success the function returns the number of bytes copied. If less than len bytes are in the queue, the function copies less than requested. When dequeued, data is no longer accessible from the queue.This is the normal usage of a queue, but if you want to “peek” at data within the queue without removing it, you can use kfifo_out_peek():

unsigned int kfifo_out_peek(struct kfifo *fifo, void *to, unsigned int len,unsigned offset);

This works the same as kfifo_out(), except that the out offset is not incremented,and thus the dequeued data is available to read on a subsequent call to kfifo_out().The parameter offset specifies an index into the queue; specify zero to read from the head of the queue, as kfifo_out() does.

Obtaining the Size of a Queue

To obtain the total size in bytes of the buffer used to store a kfifo’s queue, call kfifo_size():

static inline unsigned int kfifo_size(struct kfifo *fifo);

In another example of horrible kernel naming, use kfifo_len() to obtain the number of bytes enqueued in a kfifo:

static inline unsigned int kfifo_len(struct kfifo *fifo);

To find out the number of bytes available to write into a kfifo, call kfifo_avail():

static inline unsigned int kfifo_avail(struct kfifo *fifo);

Finally, kfifo_is_empty() and kfifo_is_full() return nonzero if the given kfifo is

empty or full, respectively, and zero if not:

static inline int kfifo_is_empty(struct kfifo *fifo);

static inline int kfifo_is_full(struct kfifo *fifo);

Resetting and Destroying the Queue

To reset a kfifo, jettisoning all the contents of the queue, call kfifo_reset():

static inline void kfifo_reset(struct kfifo *fifo);

To destroy a kfifo allocated with kfifo_alloc(), call kfifo_free():

void kfifo_free(struct kfifo *fifo);

If you created your kfifo with kfifo_init(), it is your responsibility to free the associated

buffer. How you do so depends on how you created it.

Example Queue Usage

With these interfaces under our belt, let’s take a look at a simple example of using a kfifo.

Assume we created a kfifo pointed at by fifo with a queue size of 8KB.We can now

enqueue data onto the queue. In this example, we enqueue simple integers. In your own

code, you will likely enqueue more complicated, task-specific structures. Using integers in

this example, let’s see exactly how the kfifo works:

unsigned int i;

/* enqueue [0, 32) to the kfifo named ‘fifo’ */

for (i = 0; i < 32; i++)

kfifo_in(fifo, &i; sizeof(i));

The kfifo named fifo now contains 0 through 31, inclusive.We can take a peek at the

first item in the queue and verify it is 0:

unsigned int val;

int ret;

ret = kfifo_out_peek(fifo, &val, sizeof(val), 0);

if (ret != sizeof(val))

return -EINVAL;

printk(KERN_INFO “%u\n”, val); /* should print 0 */

To dequeue and print all the items in the kfifo, we can use kfifo_out():

/* while there is data in the queue … */

while (kfifo_avail(fifo)) {

unsigned int val;

int ret;

/* … read it, one integer at a time */

ret = kfifo_out(fifo, &val, sizeof(val));

if (ret != sizeof(val))

return -EINVAL;

printk(KERN_INFO “%u\n”, val);

}

This prints 0 through 31, inclusive, and in that order. (If this code snippet printed the

numbers backward, from 31 to 0, we would have a stack, not a queue.)

References

1) Linux.Kernel.Development.3rd.Edition

/Suresh